Niklas Rönnberg

Introduction

I work as an associate professor in Sound technology at the division for Media and Information Technology, Department of Science and Technology, Linköping University. I am the unit manager of the Information Visualization group, I do research on sonification, and I teach media technology courses with a focus on sound and sound technology.

Here you'll find my university website: https://liu.se/en/employee/nikro27.

I believe that it is a stimulating challenge to write and design materials, be it scientific articles, user interfaces, student texts, or user manuals, that manage to bring forward the intended message despite any possible shortcomings in the communicative environment.

I love music, and music has always been an important part of my life, from when I was a child and “only” listened to music, to the first lines of code that made my C64 computer beep, until today when I listen to and create music every day. I have deep interests in sound technology and sound design. My educational background is in Media and Communication studies, and my PhD is in Technical audiology (Medical sciences).

In my spare time I work with music and sound engineering, designing electronics for analogue synthesis, and programming (anything from JavaScript via Matlab to C-like code for Arduino microcontrollers), when I am not building Lego with my kids or out running in nature.

My weekly work schedule

Most of my work-related activities are marked in the schedule to the left; however, there might be appointments that are not marked in the schedule.

Please come by my office to see if I'm available, or give me a call or send me an email. You will find the contact information here: Contact

Legend

- Miscellaneous work, meetings & conferences

- TNM113 - Procedural Sound Design for User Interfaces

- TNM107 - Scientific methods

- TNM103 - Sound technology

- TDDE39 - Physical Interaction Design and Prototyping

- TNMK30 - Electronic publishing

- TNGD44 - Image Production

- TNGD23 - Scientific methods

- TNM088 - Digital media

- TNM091 - Media Production for Immersive Environments

- TQGD10 - GDK Diploma work seminar series

How to contact me

Zoom-link

https://liu-se.zoom.us/my/niklasronnberg

Postal address

Linköping University

581 83 LINKÖPING

SWEDEN

Phone

+46 11 36 30 73 &

+46 700 89 60 53

Visiting address

Room 2011

Kopparhammaren 2

Norra Grytsgatan

Campus Norrköping

Courses I teach

Current courses

I teach courses for the Master of Science in Media Technology study program as well as for the Bachelor in Graphic Design and Communication study program at Linköping University. See below for more information.

Invited guest lecturer

- Stuttgart Media University, Germany, November 2022

- Karel de Grote, Antwerp, Belgium, May 2019

- Stuttgart Media University, Germany, September 2018

- Stuttgart Media University, Germany, May 2018

- The Royal Institute of Technology (KTH), Stockholm, December 2017

- Stuttgart Media University, Germany, November 2017

- Mälardalen University, Eskilstuna, August - September 2017

- Stuttgart Media University, Germany, May 2017

- Stuttgart Media University, Germany, November 2015

- University of the West of Scotland, UK, September 2015

- Stuttgart Media University, Germany, November 2007 - January 2008

Old courses

Some courses I've been involved in over the years:

- 2005-2008 - TNMU12 - Music production in an international perspective, 9hp

- 2003-2008 - TNMU04, TNMU09, TNMU16 - Music production 2, studio and instruments, 12hp

- 2003-2007 - TNMU03, TNMU07, TNMU13 - Music production 1, an introduction, 12hp

- 1999-2006 - TNMK03 - Interactive media, 7.5hp

- 2002-2006 - TNMK07 - Linear media, 7.5hp

- 2004-2006 - TNMU06 - Music production 3, arrangement and sound, 10.5hp

- 2004-2006 - TNMU10, TNMU17 - Music production 4, sound and music in visual applications, 10.5hp

- 2000-2005 - TNM035 - Digital images and internet technology, 7.5hp

- 2003-2003 - TNMU05 - Recording technology, 3hp

- 2003-2003 - ETE205 – Sound- and Video-production, 7.5hp

- 1999-2001 - TNM023 - Music in interactive media, 6hp

TNM103 - Sound technology, 6 ECTS credits.

This course aims to provide students with the fundamentals of digital audio techniques for processing, analyzing and synthesizing sound. After completion of the course, the student will have acquired basic knowledge in the design of signal processing applications for professional audio, computer music and sound effects.

TNM107 - Scientific methods, 6 ECTS credits.

This course aims to provide students with the knowledge necessary for writing a scientifically sound, academic master's thesis. After completion of the course the student will be able to evaluate texts with respect to scientific and engineering standards, select and evaluate relevant scientific and engineering methods, formulate a scientific text to an academic standard, and critique a plan for a scientific study. The student will also be able to critically evaluate scientific works, seek information about and evaluate references in their own topic area, and assess and manage ethical issues and societal aspects of science and engineering in their topic area.

TNM113 - Procedural Sound Design for User Interfaces, 6 ECTS credits.

The course aims to provide students with knowledge about sound as a form of communication, the principles of using sound in interaction design and methods for designing and creating procedural sound. Briefly, the course content is: Sound and communication theory, sound design, game sound, sonification, sound technology, and methods for procedural sound.

TNMK30 - Electronic Publishing, 6 ECTS credits.

The course focuses on methods for content design in electronic publishing. It covers fundamental markup languages for content design, i.e. HTML5; an introduction to the client-server model and scripting languages, i.e. JavaScript; an introduction to structured layout and design in electronic publishing, i.e. CSS3 style sheets; an introduction to programming for electronic publishing and distributed services, e.g. dynamic information and web pages using PHP; and a short introduction to databases (MySQL) and their application in storage and retrieval of dynamic information. The course also covers social and economic aspects of the use of digital media.

TNGD10 - Video Production, 6 ECTS credits.

The course is no longer given.

The course aims to provide basic knowledge of dramaturgical storytelling using motion pictures and sound, focusing on giving a basic understanding of the parameters that control how these narrative elements affect our minds. The course also aims to introduce script writing and storyboarding, video camera technology, and the fundamentals of video editing. It encourages a critical position towards how storytelling with motion pictures is done, what is shown in the videos, and why it is shown. Even though the course builds on a theoretical platform from classical narrative film, its aim is to provide the means to use these narrative tools for commercials, informational films, or infotainment.

Curriculum vitae

I like being challenged. I enjoy figuring out how to solve a task, finding the information needed to do so, and then presenting a solution that gets the job done, is efficient, and also (if possible) beautiful, regardless of whether the task is to develop an audiological test in Matlab, to explain a gas detector in written text, or to teach the best way to record an electric guitar. I think communicating is fun and interesting, and doing that orally, in text, or with images is absolutely necessary if you want to continue to evolve and spread your ideas and visions. Irrespective of how well the task is solved, the solution needs to be packaged and delivered in a good and understandable way if it is ever going to succeed. And I guess that has shaped my background, from an educational point of view as well as work-wise.

Educational background

PhD, Linköping University 2009-2014

My PhD is in Technical audiology (Medical sciences), and my main research interest for the PhD study was hearing in noise, cognitive abilities, and hearing aid rehabilitation.

- HEAD seminars, 5hp - 2014

- Participating conferences, 3hp - 2014

- Cognition, 7.5hp - 2013

- Peripheral auditory system, 3hp - 2011

- Scientific communication and information retrieval, 3hp - 2011

- Bioethics and research ethics, 3hp - 2010

- Scientific methodology, 5hp - 2010

- Basic biostatistics, 5hp - 2010

- Foundation course; Disability research and interdisciplinarity, 10.5hp - 2010

- Hearing and deafness - an introduction to the field, 7.5hp - 2010

Courses, Linköping University 1992-1999

The focus of my studies was media and communication studies, with elements of behavioral science, theoretical philosophy and sociology. My master’s thesis in communication studies, “The Language of Emotions”, considered music as a means of communication, and my bachelor thesis in media and communication studies, “Music as an Extra Expression”, explained the film music composers’ work and their communication with the audience and the director. Both reports were written when I was studying at the Department for Thematic Studies, Communication, Linköping University.

- Webpublishing: Technology, design and communication, 7.5hp - 2015

- Masters program at Department for Thematic Studies, Communication - 1997-1998

- Introduction to advanced communication studies, 7.5hp

- Methods in communication studies, 7.5hp

- Master thesis, 30hp

- Selected topics in communication studies, 7.5hp

- Individualized course in communication studies, 7.5hp

- Media and society, 30hp - 1997

- Audio/video-production in practice, 15hp - 1997

- Television and radio production, 12hp - 1996

- Media science - 1995-1996

- New media and information technology, 7.5hp

- Media studies, project, 10.5hp

- Form and content in modern media, 7.5hp

- The communicative practice of media, 7.5hp

- Oral and written communication, 7.5hp

- Media in perspective, 7.5hp

- Media studies, motion pictures and television, 7.5hp

- History 1, 30hp - 1995

- Theoretical philosophy 2 with emphasis on the philosophy of science, 30hp - 1994

- Sociology 2, 30hp - 1994

- Theoretical philosophy 1 with emphasis on the philosophy of science, 30hp - 1993

- Basic course in behavioural sciences, 60hp - 1993

Research interests

Research interests and thoughts

Connected to the Department of Science and Technology (ITN) at Campus Norrköping is the Visualization Center C. Advanced research and development are conducted at the Visualization Center, and many results are presented to the public through popular scientific visualizations. Much of the research done in connection with the Visualization Center is focused on Visualization and Computer Graphics. Today, there is a lack of research involving sound, sound design, and communication.

Sonification of data sets

Here you'll find the university website about musical sonification: https://liu.se/en/research/sonification.

In addition, research is conducted at ITN in information visualization, i.e. how to best visualize datasets so that context and circumstances are illustrated as clearly as possible. This research, too, currently lacks a connection to audio and to sonification of visual systems. Research has shown that sound can be used to simplify the interpretation of data and to improve performance in data analysis. However, today there is no consensus on how sound can best be used to facilitate data and information visualization.

Sound could be used, for example, to show the density in a dataset, or to identify and distinguish between different datasets. This should be possible to achieve by changing the sound's frequency content, intensity, and timbre. Furthermore, sound positioning could be used to convey information not currently visible on the screen, and to help the viewer navigate in the dataset. For example, the sound could mark a correlation that occurs outside the picture if the viewer has zoomed into the dataset.
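The mappings suggested above can be sketched in a few lines of Python. This is only a minimal illustration under my own assumptions, not code from any of the sonification projects: the function names, the 220-880 Hz pitch range, and the equal-power panning law are all choices made for the example.

```python
import math


def clamp01(t):
    """Clamp a value to the range [0, 1]."""
    return max(0.0, min(1.0, t))


def density_to_pitch(density, low_hz=220.0, high_hz=880.0):
    """Map a normalized density (0..1) to a frequency in Hz.

    The mapping is exponential so that equal steps in density are
    heard as equal musical intervals (assumed range: two octaves).
    """
    return low_hz * (high_hz / low_hz) ** clamp01(density)


def density_to_gain(density, min_gain=0.1, max_gain=1.0):
    """Map a normalized density (0..1) linearly to an amplitude gain."""
    return min_gain + clamp01(density) * (max_gain - min_gain)


def offscreen_pan(x, view_left, view_right):
    """Map a data position to a stereo pan in [-1, 1].

    Items left of the visible range are panned fully left (-1),
    items right of it fully right (+1).
    """
    t = clamp01((x - view_left) / (view_right - view_left))
    return -1.0 + 2.0 * t


def equal_power_gains(pan):
    """Return (left, right) gains for a pan value in [-1, 1]."""
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


# Hypothetical usage: a dense cluster located to the right of the zoomed-in view.
frequency = density_to_pitch(0.8)
gain = density_to_gain(0.8)
left, right = equal_power_gains(offscreen_pan(150.0, 0.0, 100.0))
```

Timbre is left out of the sketch; it could be handled in the same way, for instance by mapping a data attribute to the number or balance of harmonics.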

Sound for immersive environments

Sound and music are important for how we experience an image, a movie, or a situation. Sound affects our experience in different ways, from the contextual and cultural to the physiological. Sound might, for example, affect us subconsciously through its frequency range, tempo or meter, and amplitude level. Therefore it is important to take various sound elements into consideration when designing a visualisation for a particular communicative purpose. It is also important to have knowledge about how humans perceive sound, from how the hearing system functions to how the brain receives sound and processes it to create understanding, to successfully design and use sound in the immersive environment of the dome theatre.

In the dome theatre at the Visualization Center, both frequency range and sound level dynamics might be utilised when using sound in the presentations held there. A further tool is the positioning of the sound, as the dome theatre is equipped with an advanced multi-channel system. The challenge is that the dome theatre is technically very advanced and that the design principles that apply to other media cannot be directly transferred to this environment. Moreover, current knowledge about how sounds can and should be designed and used to further enhance the impression and simplify the understanding of the presentations given in the dome theatre is inadequate. This research would fill a gap in the knowledge, and complement the research already conducted in cooperation with the Visualization Center today.

PhD study

Over the years as a doctoral student at Linköping University, I did research in the interdisciplinary field of cognitive hearing science, which covers the areas of cognitive psychology, audiology, and technology. I mainly focused my studies on speech perception in noise, working memory, and listening effort, but I also studied frequency discrimination, temporal resolution, speech perception, and spatial skills.

My main research during the PhD studies focused on how cognitive abilities, specifically working memory capacity and the executive function of updating in working memory, interact with speech understanding in noise. Usually an individual with hearing loss is rehabilitated with hearing aid(s). But if two individuals with the same hearing loss (at least according to their audiograms) have the same type of hearing aid, and this hearing aid is adjusted equally for both individuals, they are still likely to perform differently on a speech-in-noise test. Furthermore, they will benefit differently from their hearing aids, and they will experience different degrees of listening effort. Many studies have shown that cognitive abilities are important for an individual's ability to understand speech in a noisy or disruptive environment; in particular, working memory capacity correlates with the amount of noise in which the individual can still perceive the information in speech. No measure of cognitive abilities is used today in the process of fitting a hearing aid. The aim of the research was to see if we could use depleted memory capacity as an objective measure of listening effort. This degree of effort could be used as a measure of how well a hearing aid was fitted for the individual. The hypothesis was that when an individual needs to commit more cognitive resources to hearing speech in noise, less spare capacity is available to remember the information in the speech. By measuring memory performance at different signal-to-noise ratios (SNR), it was assumed that a negative effect of decreased SNR would appear, with poorer memory performance in worse SNR, and that this would demonstrate a greater degree of effort. To test this hypothesis, I developed a combined test (the Auditory Inference Span Test, AIST) of speech perception in noise and memory.
I also used two tests of the individual's cognitive ability, namely working memory capacity (through a version of the Reading Span Test) and updating ability (through a variation of the Letter Memory test). Furthermore, I also tested the ability to understand speech in noise, and developed a test of subjectively perceived effort of speech perception in noise. Initially, the tests for the pilot studies were developed using JavaScript and PHP, to easily reach participants via the Internet. After the pilots, when the actual data collection started, I implemented all tests in Matlab for testing in a fully controlled environment. Two studies were then made with young adults with normal hearing. The first of these studies evaluated the AIST as a test of the memory effect of noise, while the second study tested the difference between different types of noise (steady state noise, amplitude modulated noise, and noise containing voices). Then I conducted a study in which I used a variant of this test battery, but on older adults with hearing loss. The objective of the last study was to evaluate how age and hearing interact with speech understanding in noise and memory capacity. This research is the basis of my thesis, and the results suggest that memory is affected by the SNR, with impaired memory performance in difficult listening conditions.
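To illustrate what presenting speech at a given SNR involves, here is a minimal Python sketch of scaling a noise signal against a speech signal. It is not the Matlab code used in the studies; the function names and the RMS-based definition of SNR are assumptions made for the example.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def measure_snr_db(speech, noise):
    """SNR in dB, defined here as the ratio of the RMS levels."""
    return 20.0 * math.log10(rms(speech) / rms(noise))


def scale_noise_to_snr(speech, noise, target_snr_db):
    """Return a copy of the noise scaled so that the speech-to-noise
    ratio equals target_snr_db (the speech level is left unchanged)."""
    target_noise_rms = rms(speech) / (10.0 ** (target_snr_db / 20.0))
    gain = target_noise_rms / rms(noise)
    return [n * gain for n in noise]


def mix(speech, noise):
    """Add speech and noise sample by sample."""
    return [s + n for s, n in zip(speech, noise)]


# Hypothetical usage with placeholder signals instead of recordings.
speech = [math.sin(2.0 * math.pi * 5.0 * i / 100.0) for i in range(100)]
noise = [((i * 37) % 11 - 5) / 5.0 for i in range(100)]
stimulus = mix(speech, scale_noise_to_snr(speech, noise, -3.0))
```

A lower target SNR gives a louder noise relative to the speech, which is the condition in which poorer memory performance was expected.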

During the PhD studies, I also developed a fairly extensive test battery, in Matlab, to examine and measure the ability to distinguish between different frequencies. As stated above, an individual with hearing loss is usually rehabilitated with hearing aid(s). If the hearing loss is too severe for a hearing aid, a cochlear implant (CI) might be used. The CI is a fantastic tool that can provide access to sound for individuals who 20-30 years ago would have been considered deaf. However, many of the individuals implanted with a CI still find it hard to hear the difference between different emotional expressions (such as irony) or the difference between questions and statements. One reason for this is poorer frequency response, as well as a worse ability to hear the temporal fine structure due to the sound processing in the CI. These two factors impair the ability to distinguish between frequencies. An alternative to the CI might be electro-acoustic stimulation (EAS), which is a combination of hearing aid and CI, where the hearing aid amplifies the lower frequencies while the CI transmits the higher frequencies. Thanks to the acoustic amplification in the hearing aid, the fine structure in the lower frequencies is maintained, which should be helpful for frequency discrimination. I was asked by one of the surgeons at Linköping University Hospital if I could develop a test that could measure the ability to hear and distinguish between frequencies, which could be used for comparing different hearing aid rehabilitations. I developed a test of frequency discrimination by synthesizing an a-like sound, with a frequency shift upwards or downwards. I made two variants of this test. One test changed the frequency of the entire sound, while the second test used a sliding change. Two additional variants of the test used recorded speech.
Furthermore, I adapted two tests of temporal resolution: one test in which the subject is to perceive short gaps in a speech weighted noise, and one test in which the subject is to perceive amplitude modulation in a speech weighted noise. I also developed four versions of a test of perception of tonal pitch accent, for example to be able to hear the difference between the Swedish words "tomten" (the gnome who comes on Christmas Eve) and "tomten" (the plot where the house stands). Finally, I added two more tests of linguistic ability, as well as tests of speech perception in noise and spatial release from masking, and cognitive tests of working memory and the executive function of inhibition.
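The two synthesized variants of the frequency discrimination test described above (a shift of the entire sound versus a sliding change) can be sketched as follows. This is a hypothetical Python illustration, not the original Matlab implementation: a plain harmonic complex stands in for the a-like sound, and all names and default values are assumptions.

```python
import math


def harmonic_tone(f0_per_sample, sample_rate=44100, n_harmonics=5):
    """Synthesize a harmonic complex from a per-sample fundamental frequency.

    The phase is accumulated sample by sample, so the fundamental can
    change over time without clicks. Harmonic k has amplitude 1/k.
    """
    phase = 0.0
    samples = []
    for f0 in f0_per_sample:
        phase += 2.0 * math.pi * f0 / sample_rate
        value = sum(math.sin(k * phase) / k for k in range(1, n_harmonics + 1))
        samples.append(value / n_harmonics)  # crude scaling, keeps the peak below 1
    return samples


def shifted_tone(f0, shift_percent, duration_s=0.5, sample_rate=44100):
    """Variant 1: the entire sound is shifted up or down in frequency."""
    n = int(round(duration_s * sample_rate))
    shifted = f0 * (1.0 + shift_percent / 100.0)
    return harmonic_tone([shifted] * n, sample_rate)


def gliding_tone(f0, shift_percent, duration_s=0.5, sample_rate=44100):
    """Variant 2: the frequency slides linearly from f0 to the shifted value."""
    n = int(round(duration_s * sample_rate))
    f_end = f0 * (1.0 + shift_percent / 100.0)
    steps = max(n - 1, 1)
    freqs = [f0 + (f_end - f0) * i / steps for i in range(n)]
    return harmonic_tone(freqs, sample_rate)


# Hypothetical trial: a reference sound and two comparison sounds, 2 % upwards.
reference = shifted_tone(220.0, 0.0)
step_up = shifted_tone(220.0, 2.0)
glide_up = gliding_tone(220.0, 2.0)
```

A vowel-like timbre would additionally need formant shaping of the harmonics, which is omitted here to keep the sketch short.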

Bachelor and master thesis

My bachelor thesis in Media Studies, "Music as an Extra Expression", explained the film music composers' work and their communication with the audience and director. I worked with a qualitative approach and did, in addition to a literature review, interviews with four composers of film music. I used this data to compile a list of musical tools for analyzing film music and its impact on the audience experience. My master's thesis in Communication Studies, "The Language of Emotions", treated music as a form of communication. In this study, I used a qualitative approach and studied, with the help of literature, experiments and interviews, how music could be used as a form of communication. These data formed the basis for designing a communication model where music was the medium of communication. Difficulties in communicating with music were also discussed on the basis of contextual, knowledge-related, and cultural differences.

Publications

2025

- Rönnberg, N. (2025). Towards Sonification for Accessibility in Public Transport. In Proceedings of the International Conference on Auditory Display, pp. .

- A. Fredriksson, L. Eriksson, N. Rönnberg (Eds.) (2025). Den störningsfria staden: En antologi om bygglogistik, stads- och trafikplanering

- N. Rönnberg (2025). Framtida möjligheter. Den störningsfria staden: en antologi om bygglogistik, stads- och trafikplanering, pp. 65-75 (book chapter, part of anthology)

2024

- N. Rönnberg & J. Löwgren (2024). Understanding Modal Synergy for Exploration. In Proceedings of the Audiovisual Symposium 2024, Dalarna Audiovisual Academy (DAVA), Dalarna University, Falun, Sweden. 6 December 2024

- Elmquist, E., Bock, A., Ynnerman, A., Rönnberg, N. (2024) Towards a Systematic Scene Analysis Framework for Audiovisual Data Representations. In Proceedings of the Audiovisual Symposium 2024, Dalarna Audiovisual Academy (DAVA), Dalarna University, Falun, Sweden. 6 December 2024

- N. Rönnberg & A. Börütecene (2024). Use of Generative AI for Fictional Field Studies in Design Courses. In Adjunct Proceedings of the 2024 Nordic Conference on Human-Computer Interaction, pp. 1-5.

- E. Elmquist, M. Ejdbo, A. Bock, D. S. Thaler, A. Ynnerman, N. Rönnberg (2024). Birdsongification: Contextual and Complementary Sonification for Biology Visualization. In Proceedings of the International Conference on Auditory Display, pp. 34-41.

- K. Enge, E. Elmquist, V. Caiola, N. Rönnberg, A. Rind, M. Iber, S. Lenzi, F. Lan, R. Höldrich, W. Aigner (2024). Open Your Ears and Take a Look: A State‐of‐the‐Art Report on the Integration of Sonification and Visualization. Computer graphics forum, Vol. 43, Article e15114.

- N. Rönnberg (2024). Where Visualization Fails, Sonification Speaks. In Proceedings of EuroVis 2024, pp. 1-3.

- I. Gorenko, L. Besançon, C. Forsell, N. Rönnberg (2024). Supporting Astrophysical Visualization with Sonification. In Proceedings of EuroVis 2024, pp. 1-3.

- E. Elmquist, K. Enge, A. Rind, C. Navarra, R. Höldrich, M. Iber, A. Bock, A. Ynnerman, W. Aigner, N. Rönnberg (2024). Parallel Chords: an audio-visual analytics design for parallel coordinates. Personal and Ubiquitous Computing, pp. 1-20.

2023

- T. Ziemer, S. Lenzi, N. Rönnberg (2023). Introduction to the special issue on design and perception of interactive sonification. Journal on Multimodal User Interfaces, Vol. 17, pp. 213-214.

- N. Rönnberg, R. Ringdahl, A. Fredriksson (2023). Measurement and sonification of construction site noise and particle pollution data. Smart and Sustainable Built Environment, Vol. 12, no 4, pp. 742-764.

- E. Elmquist, A. Bock, J. Lundberg, A. Ynnerman, N. Rönnberg (2023). SonAir: the design of a sonification of radar data for air traffic control. Journal on Multimodal User Interfaces, Vol. 17, no 3, pp. 137-149.

2022

- N. Rönnberg, R. Ringdahl, A. Fredriksson (2022). Measurement and sonification of construction site noise and particle pollution data. Smart and Sustainable Built Environment, 12, 4, pp. 742-764, 2023. DOI: 10.1108/SASBE-11-2021-0189.

- Svensson, Å., Rönnberg, N. (2022) Expected teamwork attributes between human operator and automation in air traffic control. In Proc. of the 33rd Congress of the International Council of the Aeronautical Sciences (ICAS2022).

- Fredriksson Häägg, A., Weil, C., Rönnberg, N. (2022). Insight-based Evaluation of a Map-based Dashboard. In Proc. of the 26th International Conference Information Visualisation.

- Rönnberg, N., Forsell, C. (2022). Questionnaires assessing usability of audio-visual representations. In Proc. of the AVI 2022 Workshop on Audio-Visual Analytics (WAVA22), Frascati, Rome, Italy, 7 June 2022. DOI: 10.5281/zenodo.6555676

- Svoronos-Kanavas, I., Agiomyrgianakis, V., Rönnberg, N. (2022). An exploratory use of audiovisual displays on oceanographic data. In Proc. of the AVI 2022 Workshop on Audio-Visual Analytics (WAVA22), Frascati, Rome, Italy, 7 June 2022. DOI: 10.5281/zenodo.6555839

- Aigner, W., Enge, K., Iber, M., Rind, A., Elmqvist, N., Höldrich, R., Rönnberg, N., Walker, B. (2022). Workshop on Audio-Visual Analytics. In Proc. of the 2022 International Conference on Advanced Visual Interfaces (AVI 2022), June 6–10, 2022, Frascati, Rome, Italy. DOI: 10.1145/3531073.3535252.

2021

- Elmquist, E., Ejdbo, M., Bock, A., Rönnberg, N. (2021). OpenSpace sonification: Complementing visualization of the solar system with sound. In Proc. of the 26th International Conference on Auditory Display (ICAD 2021), June 25-28, 2021, Virtual Conference, pp. 137-142. DOI: 10.21785/icad2021.018

- N. Rönnberg (2021). Sonification for Conveying Data and Emotion. In Proceedings of AM'21: Audio Mostly 2021, September 2021, virtual/Trento, Italy, pp. 56-63. DOI: 10.1145/3478384.3478387

- Å. Svensson, J. Lundberg, C. Forsell, & N. Rönnberg (2021). Automation, teamwork, and the feared loss of safety: Air traffic controllers’ experiences and expectations on current and future ATM systems. In Proceedings of the 32nd European Conference on Cognitive Ergonomics (ECCE 2021), April 26-29, Siena, Italy 2021 (pp. 1-8). DOI: 10.1145/3452853.3452855

- Rönnberg, N., & Löwgren, J. (2021). Designing the user experience of musical sonification in public and semi-public spaces. SoundEffects - An Interdisciplinary Journal of Sound and Sound Experience, 10(1), 125-141. DOI: 10.7146/se.v10i1.124202

2020

- L. Besançon, N. Rönnberg, J. Löwgren, J.P. Tennant, & M. Cooper (2020) Open up: a survey on open and non-anonymized peer reviewing. Research Integrity and Peer Review 5, 8. DOI: 10.1186/s41073-020-00094-z

2019

- N. Rönnberg (2019). Towards Interactive Sonification in Monitoring of Dynamic Processes. In Proceedings of the Nordic Sound and Music Computing Conference 2019 (NSMC2019) and the Interactive Sonification Workshop 2019 (ISON2019), November 18-20, 2019, Stockholm, Sweden, pp 92-99.

- N. Rönnberg (2019). Musical Elements in Sonification Support Visual Perception. In Proceedings of the 31st European Conference on Cognitive Ergonomics (ECCE 2019), September 10-13, 2019, Belfast, United Kingdom, pp 114-117. DOI: 3335082.3335097.

- N. Rönnberg (2019). Musical sonification supports visual discrimination of color intensity. Behaviour & Information Technology, 38, 10, 1028-1037. DOI: 10.1080/0144929X.2019.1657952.

- N. Rönnberg (2019). Sonification supports perception of brightness contrast. Journal on Multimodal User Interfaces, pp. 1–9, 7, 2019.

- N. Rönnberg & J. Löwgren (2019). Traces of Modal Synergy. Studying Interactive Musical Sonification of Images in General-Audience Use. In Proc. of the 25th International Conference on Auditory Display (ICAD), June 23-27, 2019, Northumbria University, Newcastle-upon-Tyne, UK, pp 199-206.

- K. Akram Hassan, Y. Liu, L. Besançon, J. Johansson, & N. Rönnberg (2019). A Study on Visual Representations for Active Plant Wall Data Analysis. Data, 4(2), 74.

- K. Akram Hassan, N. Rönnberg, C. Forsell, M. Cooper, & J. Johansson (2019). A Study on Parallel Coordinates for Pattern Identification in Temporal Multivariate Data. In Proc. of Information Visualization (IV23).

2018

- N. Rönnberg & J. Löwgren (2018). Photone: Exploring modal synergy in photographic images and music. In Proc. of the 24th International Conference on Auditory Display (ICAD), June 10-15, 2018, Michigan Technological University, Houghton, MI, USA, pp 73-79.

2017

- N. Rönnberg (2017). Sonification Enhances Perception of Color Intensity. IEEE VIS Infovis Posters, 2017.

- K. Akram Hassan, J. Johansson, C. Forsell, M. Cooper, & N. Rönnberg (2017). On the Use of Parallel Coordinates for Temporal Multivariate Data. IEEE VIS Infovis Posters, 2017.

2016

- N. Rönnberg & J. Johansson (2016). Interactive Sonification for Visual Dense Data Displays. Proceedings of ISon 2016, 5th Interactive Sonification Workshop, CITEC, Bielefeld University, Germany, December 16, 2016.

- N. Rönnberg, J. Lundberg, & J. Löwgren (2016). Sonifying the Periphery: Supporting the Formation of Gestalt in Air Traffic Control. Proceedings of ISon 2016, 5th Interactive Sonification Workshop, CITEC, Bielefeld University, Germany, December 16, 2016.

- M. Nylin & N. Rönnberg (2016). A New Modality for Air Traffic Control. The Sixth SESAR Innovation Days, poster, 2016

- Kahin Akram Hassan, N. Rönnberg, C. Forsell, & J. Johansson (2016). On the Performance of Stereoscopic Versus Monoscopic 3D Parallel Coordinates. IEEE VIS Infovis Posters, 2016

- N. Rönnberg, G. Hallström, T. Erlandsson, & J. Johansson (2016). Sonification Support for Information Visualization Dense Data Displays. IEEE VIS Infovis Posters, 2016

- N. Rönnberg & J. Löwgren (2016). The Sound Challenge to Visualization Design Research. Proceedings of EmoVis 2016, ACM IUI 2016 Workshop on Emotion and Visualization, Sonoma, CA, USA, March 10, 2016.

2014

- N. Rönnberg, M. Rudner, T. Lunner, & S. Stenfelt (2014). Assessing listening effort by measuring short-term memory storage and processing of speech in noise. Speech, Language and Hearing, 17(3), 123-132.

- N. Rönnberg, M. Rudner, T. Lunner, & S. Stenfelt (2014). Memory performance on the Auditory Inference Span Test is independent of background noise type for young adults with normal hearing at high speech intelligibility. Frontiers in Psychology, 5, 1490.

2013

- N. Rönnberg, M. Rudner, T. Lunner, & S. Stenfelt (2013). Frequency discrimination and human communication

2012

- N. Rönnberg, M. Rudner, T. Lunner, & S. Stenfelt (2012). Testing listening effort for speech comprehension. Speech Perception and Auditory Disorders, Danavox Jubilee Foundation, 2012, 73-80

- N. Rönnberg, S. Stenfelt, M. Rudner, T. Lunner (2012). Att mäta lyssningsansträngning

2011

- N. Rönnberg, S. Stenfelt, & M. Rudner (2011). Testing listening effort for speech comprehension using the individuals’ cognitive spare capacity. Audiology Research, 1(1S). doi: 10.4081/audiores.2011.e22.

- M. Rudner, Elaine E. H. Ng, N. Rönnberg, S. Mishra, J. Rönnberg, T. Lunner, & S. Stenfelt (2011). Cognitive spare capacity as a measure of listening effort. Journal of Hearing Science, 11, 47-49.

- N. Rönnberg, M. Rudner, T. Lunner, & S. Stenfelt (2011). Adverse listening conditions affect short-term memory storage and processing of speech for older adults with hearing impairment.

- B. Larsby & N. Rönnberg (2011). STAF-dagar i Uppsala: Mycket om kopplingen audiologi och otokirurgi, Audionytt, ISSN 0347-6308, Vol. 38, no 3, 31-33

- N. Rönnberg, S. Stenfelt, M. Rudner, & T. Lunner (2011). An objective measure of listening effort: The Auditory Inference Span Test

- N. Rönnberg, S. Stenfelt, & M. Rudner (2011). AIST - Ett test av lyssningsansträngning

- N. Rönnberg, S. Stenfelt, M. Rudner, & T. Lunner (2011). Testing listening effort for speech comprehension

- M. Rudner, Elaine E. H. Ng, N. Rönnberg, S. Mishra, J. Rönnberg, T. Lunner, & S. Stenfelt (2011). Understanding auditory effort by measuring cognitive spare capacity

2010

- N. Rönnberg, S. Stenfelt, & M. Rudner (2010). Testing effort for speech comprehension using the individuals’ cognitive spare capacity - the Auditory Inference Span test

- N. Rönnberg, S. Stenfelt, & M. Rudner (2010). The Auditory Inference Span Test – Developing a test for cognitive aspects of listening effort for speech comprehension

Miscellaneous thoughts, ideas, or things I do for fun

The following are small projects I do just for the fun of it. Some are interesting, others might be good, and others are just plain useless. Some will be code ideas, and some will be hardware ideas. Comments, suggestions, questions, improvements, everything and anything are welcome! Just let me know!

If you are interested in DIY analogue synthesizer modules you might be interested in this web page hosted on my old and slow personal web server: DIY Eurorack modules.

For fun I wanted to learn slightly more about the Data-Driven Documents (D3) JavaScript library and SVG, and I ended up with this rather silly universe of colourful stars: SVG universe.

The color clock

I found this web page really fascinating, but I thought that there might be ways of improving or at least further extending it. As I made my changes to the original idea, I simultaneously created a meta web page about the HTML, CSS, and JavaScript code I used for the web page.

Matlab code for playing around with photos

Automatically determine the color tone of a photo

I had an idea that I wanted to read the overall color tone of a photo. This would be the first step in trying to automatically select appropriate background music for a photo. The thought was that the hue or tint of the photo would reveal a red tint or a blue tint or something else, and that this could then be used to find music mimicking the emotional impression of the color tone, for example through major or minor scales.

Not very surprisingly, I discovered problems I hadn’t considered. For example, we humans do not interpret a color photo as a lot of dots in different colors, but rather based on the color of the content or the motif of the photo. So a photo of green grass and some yellowish sand with a red ball on it is not as red as my mind thinks it is, but rather a dull gray nuance. But after some tweaking I managed to get an overall color tone that is quite ok. Maybe not perfect, but quite ok.

I divided the color information into the three basic colors: red, green, and blue. I then mapped each of these to a black and white channel to determine which color channel had the most information. After that I weighted the color information differently: I took the mean of the color channel with the most information, and then weighted the other two color channels in relation to that channel.

The image to the right shows four photos, each surrounded by its color tone.
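The idea can be sketched in a few lines of Python. This is not the original Matlab code, and the details are assumptions: "most information" is taken to mean the highest mean intensity, and each weaker channel is scaled by the ratio of its mean to the dominant channel's mean. The function name `color_tone` is hypothetical.

```python
def color_tone(pixels):
    """Estimate an overall color tone from a list of (r, g, b) tuples (0-255).

    Assumption: the channel with the highest mean intensity is the one
    with the "most information".
    """
    count = len(pixels)
    means = [sum(p[c] for p in pixels) / count for c in range(3)]
    dominant = max(range(3), key=lambda c: means[c])

    tone = []
    for c in range(3):
        if c == dominant:
            # The dominant channel keeps its plain mean.
            tone.append(means[c])
        else:
            # Assumed weighting: scale the weaker channel by its ratio to
            # the dominant channel, which pushes the tint towards the
            # dominant color.
            weight = means[c] / means[dominant] if means[dominant] else 0.0
            tone.append(means[c] * weight)
    return tuple(tone), dominant


tone, dominant = color_tone([(200, 100, 50), (100, 50, 50)])
print(tone, "dominant channel index:", dominant)
```

Other weightings would work just as well; the point is only that the two weaker channels are attenuated relative to the strongest one.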

Determine the activity in a photo

The next step towards selecting matching background music for a photo would be to check the activity or the animation in the photo. My idea was to count contrasting areas and the number of unique colors. This could then be used to select music with an appropriate tempo and meter, as a photo with fewer contrasting areas might be less stressful to look at.

Furthermore, the average lightness in the image could also, to some degree, reflect the frequency range in the music. With these parameters music could be selected from:

- a mood point of view, i.e. the hue or tint of the photo, resulting in key and major/minor parameters for the music

- an activity point of view, i.e. the number of unique colors and contrasting areas, resulting in tempo and meter parameters for the music

- the lightness of the photo, resulting in frequency register parameters for the music

The image to the right shows one photo with the parameters for that specific photo given.
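As a rough illustration, the activity and lightness parameters could be computed as in the Python sketch below. It is not the original Matlab code. Counting "contrasting areas" is approximated here by counting neighboring pixel pairs whose lightness differs by more than a threshold; that proxy, the threshold value, and the name `photo_activity` are all assumptions.

```python
def photo_activity(image, contrast_threshold=64):
    """Return simple activity measures for a rectangular image.

    image: list of rows, each row a list of (r, g, b) tuples (0-255).
    """
    pixels = [p for row in image for p in row]
    unique_colors = len(set(pixels))

    # Lightness per pixel, taken as the plain mean of the three channels.
    light = [[sum(p) / 3 for p in row] for row in image]

    # Proxy for contrasting areas: count strong lightness steps between
    # horizontally and vertically adjacent pixels.
    contrast_edges = 0
    for y, row in enumerate(light):
        for x, value in enumerate(row):
            if x + 1 < len(row) and abs(value - row[x + 1]) > contrast_threshold:
                contrast_edges += 1
            if y + 1 < len(light) and abs(value - light[y + 1][x]) > contrast_threshold:
                contrast_edges += 1

    mean_lightness = sum(sum(row) for row in light) / len(pixels) / 255
    return {
        "unique_colors": unique_colors,
        "contrast_edges": contrast_edges,
        "lightness": mean_lightness,
    }


black, white = (0, 0, 0), (255, 255, 255)
print(photo_activity([[black, white], [black, white]]))
```

How these numbers are then mapped to tempo, meter, and register is a separate design decision that the sketch leaves open.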

Create mosaic of a photo

As reading the hue or tint in a photo works quite well, I thought that this code could be used to create a mosaic-like effect. This is done by dividing the photo into boxes (the number of boxes can be specified). It is then possible to blend (or mix) the mosaic and the original photo, and the original photo can be applied as a color photo or as light information (gray scale). It is also possible to select how to mix the two images, either by adding (mixing) or by multiplying the images together.
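A minimal Python sketch of the mosaic and the two blend modes is given below. It is not the original Matlab code and makes simplifying assumptions: the image is a gray scale grid with values between 0 and 1, each box is filled with its plain mean value rather than the weighted color tone, and the names `mosaic` and `blend` as well as the `amount` parameter are hypothetical.

```python
def mosaic(image, boxes_x, boxes_y):
    """Replace each box of a gray scale image (rows of floats) by its mean."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for by in range(boxes_y):
        y0, y1 = by * height // boxes_y, (by + 1) * height // boxes_y
        for bx in range(boxes_x):
            x0, x1 = bx * width // boxes_x, (bx + 1) * width // boxes_x
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            if not block:
                continue  # more boxes than pixels along one axis
            mean = sum(block) / len(block)
            for y in range(y0, y1):
                for x in range(x0, x1):
                    out[y][x] = mean
    return out


def blend(mosaic_image, original, mode="add", amount=0.5):
    """Mix two equally sized images by weighted adding or by multiplying."""
    result = []
    for m_row, o_row in zip(mosaic_image, original):
        if mode == "add":
            row = [(1 - amount) * m + amount * o for m, o in zip(m_row, o_row)]
        elif mode == "multiply":
            row = [m * o for m, o in zip(m_row, o_row)]
        else:
            raise ValueError("mode must be 'add' or 'multiply'")
        result.append(row)
    return result


photo = [[0.0, 1.0, 0.2, 0.4],
         [1.0, 0.0, 0.4, 0.2]]
tiles = mosaic(photo, boxes_x=2, boxes_y=1)
print(blend(tiles, photo, mode="multiply"))
```

Multiplying keeps the detail of the original photo inside each box, while adding gives a softer cross-fade between the two images.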

Matlab code for playing around with sound

Automatically detect response time in audio files

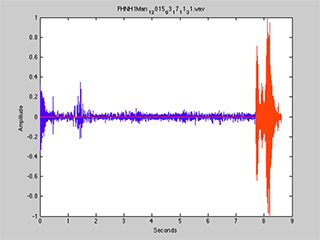

I got an interesting question and challenge from a friend. She's doing research in cognitive hearing science, and in her research she gets a lot of short audio files, probably more than a hundred for each participant. What she's interested in is the response time within each audio file, and she was measuring this by hand by marking (in an audio editor) the first part of the audio file until the actual response occurred, giving the response time in milliseconds. However, this is obviously a tedious and slow (not to mention deadly boring) task, so she asked me if this could be done automatically. And I accepted this challenge.

First I set a threshold to determine when an actual response started. This threshold was set to the RMS of the entire audio file. When a sample was louder than the threshold, the response time was measured from the start of the sound until that sample. As the sample sound files I got from my friend all contained more silent parts than speech, the RMS should be low enough to use as the threshold. However, a problem might arise as some speech sounds start slowly or indistinctly, like "m" or "s". Therefore I set all samples during the response time to zero (0), and then plotted the measured sound on top of the original sound while letting Matlab play the measured sound. By doing this, any poorly measured response time would show clearly. Yes, this means that you have to listen through all audio recordings, but at least the response time is automatically measured and exported to a text file. If one or a few of the measured response times aren't that accurate, you need to write down that file name and do the process by hand. Still, this method saves a lot of time.
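The basic threshold step can be sketched in Python as follows. This is not the original Matlab code; it assumes the audio is already loaded as a list of samples between -1 and 1, and the function names are hypothetical.

```python
import math


def rms(samples):
    """Root mean square of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def response_time_ms(samples, sample_rate):
    """Time in ms until the first sample louder than the file's RMS.

    Returns None if no sample exceeds the threshold (e.g. a silent file).
    """
    threshold = rms(samples)
    for index, sample in enumerate(samples):
        if abs(sample) > threshold:
            return 1000.0 * index / sample_rate
    return None


# 800 ms of silence followed by 200 ms of "speech" at 1 kHz sample rate.
recording = [0.0] * 800 + [0.5] * 200
print(response_time_ms(recording, sample_rate=1000))
```

Because the threshold is derived from the file itself, it adapts to the recording level, but it will trigger on any early noise that is louder than the RMS.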

This worked great until one sound file (I had gotten four sample sound files to work with) started with the participant clearing his throat and then waiting for a while before giving his reply... I then decided to divide the sound into a certain number of windows and check whether any sample exceeded the threshold within each window. If so, the window value was set to one (1), otherwise it was set to zero (0). When all windows were checked there was an array such as: 0 0 1 1 0 0 0 1 0 0 0 1 1 1 1 1 1 1 1 0 0 0. Then I let Matlab find the longest uninterrupted series of ones, step back one window, set all samples before that window to zero (0), and then check for the first sample with a higher value than the threshold, as previously described. This method works well as long as the actual response is longer than any loud noise prior to it.
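The windowed variant can be sketched in Python like this. It is not the original Matlab code; the window size in samples and the function names are assumptions, and instead of zeroing the earlier samples the sketch simply starts searching one window before the longest run.

```python
import math


def longest_run_start(flags):
    """Index where the longest uninterrupted run of ones starts, or None."""
    best_start, best_length, run_start = None, 0, None
    for index, flag in enumerate(list(flags) + [0]):  # trailing 0 closes a final run
        if flag and run_start is None:
            run_start = index
        elif not flag and run_start is not None:
            if index - run_start > best_length:
                best_start, best_length = run_start, index - run_start
            run_start = None
    return best_start


def windowed_response_time_ms(samples, sample_rate, window_size):
    """Response time in ms, ignoring short loud noises before the response."""
    threshold = math.sqrt(sum(s * s for s in samples) / len(samples))
    # One flag per window: 1 if any sample in the window exceeds the threshold.
    flags = [
        1 if any(abs(s) > threshold for s in samples[start:start + window_size]) else 0
        for start in range(0, len(samples), window_size)
    ]
    run = longest_run_start(flags)
    if run is None:
        return None
    # Step back one window, then look for the first sample above the threshold.
    search_from = max(run - 1, 0) * window_size
    for index in range(search_from, len(samples)):
        if abs(samples[index]) > threshold:
            return 1000.0 * index / sample_rate
    return None


# A short throat clearing at 100 ms, the actual reply at 500 ms.
recording = [0.0] * 100 + [0.9] * 20 + [0.0] * 380 + [0.5] * 300 + [0.0] * 200
print(windowed_response_time_ms(recording, sample_rate=1000, window_size=50))
```

With the example array from the text, `longest_run_start` points at the twelfth window, which is where the run of eight ones begins.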

The end result works pretty well, even though there's still the need for manual corrections for some audio files. The code reads all audio files in a folder, displays the measured sound on top of the original sound while playing the measured sound, and then exports a text file with the file names and the measured response times in milliseconds. By tweaking and adjusting the threshold a better result might be obtained.

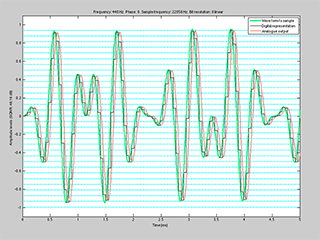

Tool to show sampling of a sound wave

I needed a way to easily show and explain sample frequency and bit resolution. This Matlab code creates a sinusoid at a definable frequency and samples it with a definable sample frequency and a selectable bit resolution. As I always say, the correct way to interpret the Nyquist sampling theorem is that you need a sample frequency at least (!) twice as high as the frequency of the signal you want to sample. I therefore added the possibility to phase shift the sinusoid, to be able to illustrate the (at least theoretical) problem that arises if the sampler only hits the zero crossings of the original sound.
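The zero-crossing problem is easy to reproduce in a short Python sketch. This is not the original Matlab tool; the function name `sample_sine`, the default duration, and the simple rounding quantizer are assumptions made for illustration.

```python
import math


def sample_sine(freq, sample_rate, bits, phase=0.0, duration=0.01):
    """Sample a unit sinusoid and quantize it to the given bit resolution."""
    levels = 2 ** (bits - 1) - 1  # largest positive integer code
    count = int(round(duration * sample_rate))
    samples = []
    for k in range(count):
        value = math.sin(2 * math.pi * freq * k / sample_rate + phase)
        # Round to the nearest integer code, then scale back to -1..1.
        samples.append(round(value * levels) / levels)
    return samples


# A 1 kHz sinusoid sampled at exactly 2 kHz.
on_zero_crossings = sample_sine(1000, 2000, bits=8, phase=0.0)
on_peaks = sample_sine(1000, 2000, bits=8, phase=math.pi / 2)
print(max(abs(s) for s in on_zero_crossings))  # every sample lands on a zero crossing
print(max(abs(s) for s in on_peaks))           # every sample lands on a peak
```

With the sample frequency at exactly twice the signal frequency, the result depends entirely on the phase: at phase zero the sampled signal is silent, while a quarter-period shift captures the full amplitude. Lowering `bits` makes the quantization steps visible.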

There is also the possibility to use a more complex wave form created by three sinusoids. I also added a low pass filter function to mimic a digital to analogue converter. All wave forms are plotted: the original wave form, the digital representation, and the digital to analogue converted wave form. It is also possible to play back the audio of the original wave form as well as of the digital representation.

Wave forms

For a lecture in sound synthesis I needed an easy way to show different wave forms as well as the frequency content, the harmonics, of these wave forms. Furthermore, I wanted to be able to show, to some extent, the possibilities of wave shaping and sound processing when only one or two oscillators are used. I created a simple tool that can show:

- Three basic wave forms:

  - Sinusoid

  - Triangle wave adjustable between falling and rising sawtooth wave

  - Square wave with adjustable duty cycle

- Mix of waveforms

- Distortion of the wave form(s)

- Sync between two oscillators

- Frequency modulation between two oscillators

- Ring modulation with two oscillators
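A few of the items above can be sketched in Python. This is not the original Matlab tool; the parameter names `skew` and `duty` and all function names are assumptions. Each oscillator is written as a function of phase, measured in cycles.

```python
import math


def sine(phase):
    return math.sin(2 * math.pi * phase)


def skewed_triangle(phase, skew=0.5):
    """Triangle wave; skew=0 is a falling sawtooth, skew=1 a rising one."""
    phase %= 1.0
    if phase < skew:
        return -1.0 + 2.0 * phase / skew                # rising part
    return 1.0 - 2.0 * (phase - skew) / (1.0 - skew)    # falling part


def square(phase, duty=0.5):
    """Square wave that is high for the fraction `duty` of each cycle."""
    return 1.0 if phase % 1.0 < duty else -1.0


def render(wave, freq, sample_rate, count, **params):
    """Render `count` samples of an oscillator at the given frequency."""
    return [wave(freq * k / sample_rate, **params) for k in range(count)]


def mix(a, b, balance=0.5):
    return [(1 - balance) * x + balance * y for x, y in zip(a, b)]


def ring_modulate(a, b):
    """Ring modulation is the sample-wise product of two signals."""
    return [x * y for x, y in zip(a, b)]


carrier = render(sine, 440, 8000, 64)
modulator = render(square, 110, 8000, 64, duty=0.25)
print(ring_modulate(carrier, modulator)[:4])
```

Sync, frequency modulation, and distortion are left out to keep the sketch short; they would follow the same pattern of small functions operating on phase or on rendered sample lists.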

The code isn't very neat; I just hate writing GUIs in Matlab! I've planned to add a filter section to this, but I'm not sure when I'll get around to writing that code. Another thing I would like to improve is realtime processing of the wave forms, but I'm not sure whether either I or Matlab is capable of that.

Normalizing of sounds

During my PhD student years, I needed to RMS equalize and normalize a bunch of audio files. This code takes all audio files in a folder and equalizes them in amplitude according to the level of the sound file with the highest mean amplitude (RMS). Then all audio files are normalized to 75% of the sound level of the audio file with the highest absolute sound level, so that no clipping occurs. This gives all sound files the same mean amplitude at a rather high sound level without any clipping or saturation. However, this does not imply that the sounds are perceived as equally loud. Furthermore, all sound files are sinusoidally faded at the beginning and at the end with a fade time of 50 ms. Finally, all edited audio files are stored in the same folder, but with new file names.
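The processing chain can be sketched in Python as follows. This is not the original Matlab code, and several details are my reading of the description rather than confirmed behavior: one common gain is applied so that the highest peak across all files ends up at 75% of full scale, the fade is a quarter-period sine ramp, and no file is completely silent. File reading and writing are left out, and the function names are hypothetical.

```python
import math


def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def equalize_and_normalize(sounds, sample_rate, peak=0.75, fade_ms=50):
    """RMS equalize a list of sounds, scale to a common peak, and add fades.

    sounds: list of sample lists in the range -1..1 (assumed non-silent).
    """
    # 1. Bring every sound up to the RMS of the loudest one.
    target = max(rms(s) for s in sounds)
    equalized = [[v * target / rms(s) for v in s] for s in sounds]

    # 2. One common gain, so the highest peak over all sounds becomes `peak`.
    highest = max(abs(v) for s in equalized for v in s)
    gain = peak / highest

    # 3. Sinusoidal fade in and fade out.
    fade_samples = int(sample_rate * fade_ms / 1000)
    result = []
    for sound in equalized:
        out = [v * gain for v in sound]
        count = min(fade_samples, len(out) // 2)
        for i in range(count):
            weight = math.sin(0.5 * math.pi * i / count)  # ramps from 0 towards 1
            out[i] *= weight
            out[-1 - i] *= weight
        result.append(out)
    return result


loud = [0.5, -0.5] * 500
quiet = [0.1, -0.1] * 500
processed = equalize_and_normalize([loud, quiet], sample_rate=1000)
print(abs(processed[0][500]), abs(processed[1][500]))
```

Using one common gain in step 2 keeps the RMS levels equal across files, which a per-file peak normalization would undo.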